- cross-posted to:

- hackernews@derp.foo

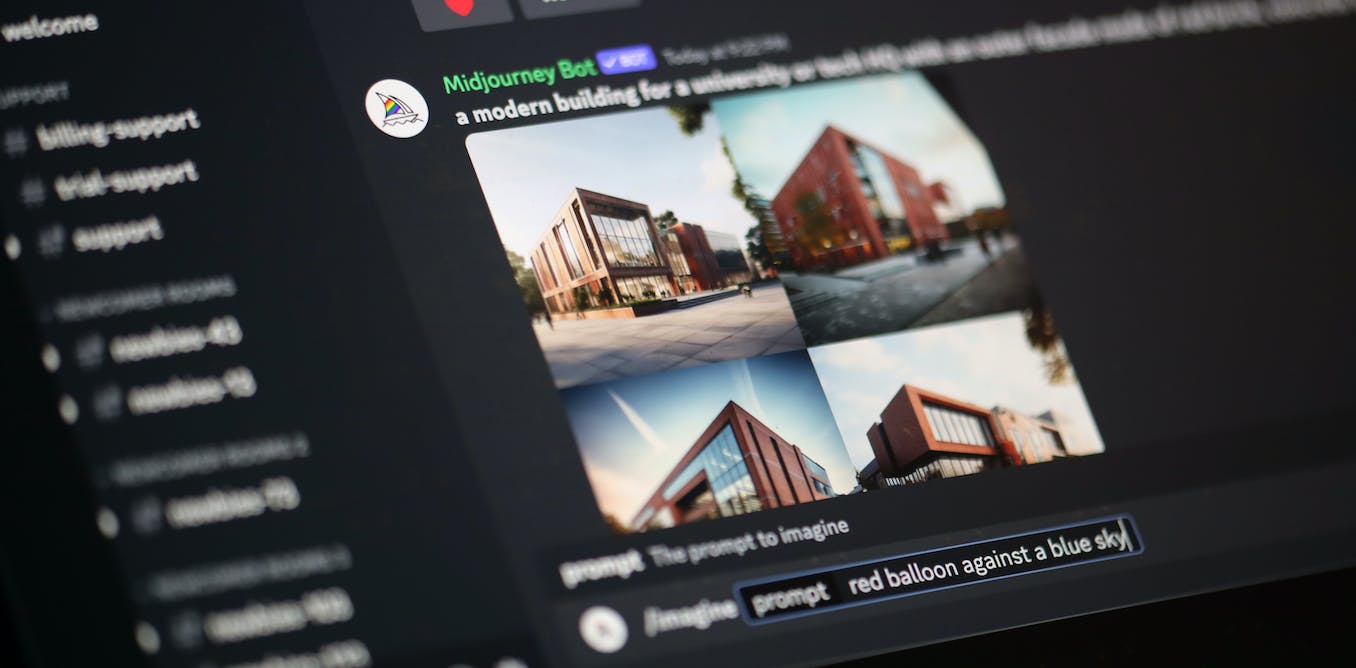

Data poisoning: how artists are sabotaging AI to take revenge on image generators

As AI developers indiscriminately suck up online content to train their models, artists are seeking ways to fight back.

That’s simply not how AI works. If you look inside the models after training, you will not see a shred of the original training data, just a bunch of numbers and weights.

If the individual images are so unimportant then it won’t be a problem to only train it on images you have the rights to.

They do have the rights, because this falls under fair use. It doesn’t matter if a picture is copyrighted as long as the outcome is transformative.

I’m sure you know something the Valve lawyers don’t.

> Just a bunch of numbers and weights

I agree with your sentiment, but the point isn’t just that the data is encoded as a model; it’s that the encoding is extremely lossy. Compression, encoding, digital photography, etc. all just turn pictures into different numbers to be processed by some math machine. What makes the real difference is that a huge amount of information is intentionally lost during training. If it were just compression, it would be a game-changing piece of tech for other reasons, and YouTube would be using it today. But it is not good at preserving the original training data.

Rant not really for you, but in case someone else nitpicks in the future :)
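For anyone who wants the lossy-vs-lossless distinction in concrete terms, here is a toy sketch. It is only an analogy, not a claim about how any real image generator works: the "training data" is 1,000 made-up noisy points, and the "model" is a two-parameter line fit. All names and numbers are hypothetical.

```python
import random
import struct
import zlib

random.seed(0)

# Hypothetical "training data": 1,000 noisy samples around the line y = 3x + 1.
xs = [i / 999 for i in range(1000)]
ys = [3.0 * x + 1.0 + random.gauss(0.0, 0.1) for x in xs]

# Lossless compression: decompressing gives back the exact original values.
raw = struct.pack(f"{len(ys)}d", *ys)
restored = list(struct.unpack(f"{len(ys)}d", zlib.decompress(zlib.compress(raw))))
lossless_ok = restored == ys

# "Training": squeeze all 1,000 samples into two numbers (slope, intercept)
# with an ordinary least-squares fit.
n = len(xs)
mean_x = sum(xs) / n
mean_y = sum(ys) / n
slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
    (x - mean_x) ** 2 for x in xs
)
intercept = mean_y - slope * mean_x

# The fit keeps the overall trend but cannot hand back individual samples.
reconstructed = [slope * x + intercept for x in xs]
max_error = max(abs(r - y) for r, y in zip(reconstructed, ys))

print("lossless round trip exact:", lossless_ok)
print("fitted slope and intercept:", slope, intercept)
print("largest per-sample error after fitting:", max_error)
```

The zlib round trip returns every sample exactly, while the fitted line only recovers the general pattern. That is the sense in which training throws information away on purpose instead of storing it.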